Event-Driven Architecture in Spring Boot Microservices with Kafka

Modern microservices need to scale and evolve independently. Event-Driven Architecture (EDA) supports this by having services communicate through events rather than direct calls, which keeps them decoupled. With Apache Kafka as the event streaming platform, services can communicate asynchronously through a durable, shared log.

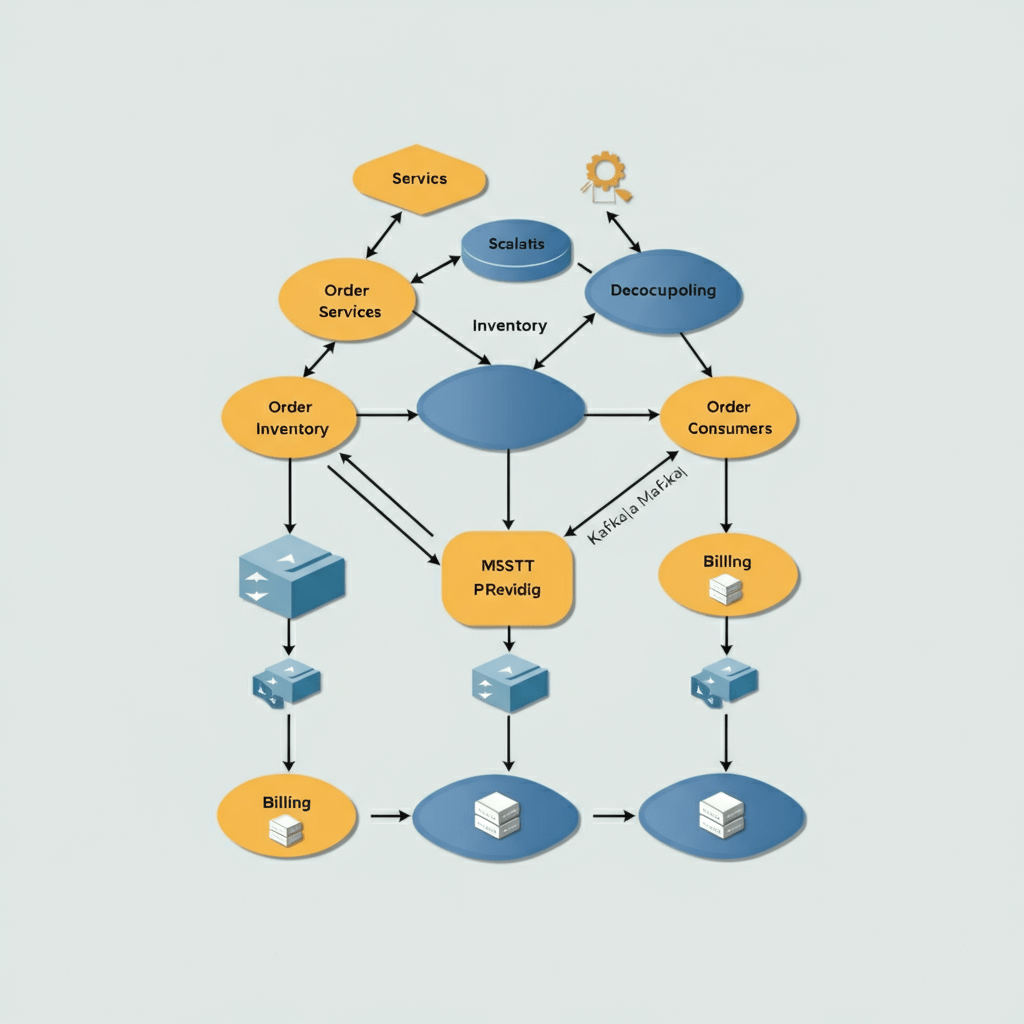

This blog will explore how Event-Driven Architecture works, why it scales, and how to implement it in Spring Boot using Kafka. We’ll cover Kafka topic configurations, producer-consumer setups, asynchronous communication, and a detailed real-world example involving Order → Inventory → Billing microservices.

Table of Contents

- What is Event-Driven Architecture and How It Scales

- Kafka Topics and Producer-Consumer Setup

- Asynchronous Service Communication

- Real-World Use Case: Order → Inventory → Billing

- Summary

What is Event-Driven Architecture and How It Scales

Definition of EDA

Event-Driven Architecture (EDA) revolves around the production, detection, and consumption of events. Instead of integrating through direct API calls, services communicate asynchronously by publishing events and reacting to them.

An event is a record of a state change, such as “payment completed” or “order placed.” Think of it as a signal that broadcasts the occurrence of something, prompting other services to react.
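
As a rough illustration, an event can be modeled as a small immutable value that names what changed and when. The sketch below uses a Java record; the OrderPlacedEvent name and its fields are assumptions made for this example, not types from Kafka or Spring.

```java
import java.time.Instant;

// Hypothetical event type: an immutable record of a state change.
record OrderPlacedEvent(String orderId, Instant occurredAt) {

    // Events describe something that has already happened, so they carry
    // the identifier of the thing that changed and a timestamp.
    static OrderPlacedEvent of(String orderId) {
        return new OrderPlacedEvent(orderId, Instant.now());
    }
}
```

Keeping events immutable means every consumer sees the same facts, no matter when it reads them.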

How EDA Works in Microservices

- Events are published to a central message broker (like Kafka).

- Interested services (subscribers) consume the events and respond accordingly.

- The producer and consumers are decoupled, meaning changes in one service don’t affect others directly.

Benefits of EDA

- Scalability: Services scale independently, and a topic can be partitioned so that more consumers share the load as throughput grows.

- Decoupling: Microservices don’t need to be aware of each other; they only interact through events.

- Fault Tolerance: Events are stored in Kafka, so a consumer that was temporarily down can catch up on what it missed once it restarts, as long as the events are still within the retention period.

- Real-time Processing: Systems can react to changes as they happen by listening to specific event streams.
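
The fault-tolerance point can be sketched with a toy model of a single partition: an append-only list plus one read offset per consumer group. EventLog and its methods are hypothetical names, and this is not how Kafka is implemented internally. It only illustrates that reading does not remove events, so a group that was offline picks up where it left off.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of one topic partition. Illustrative only, not Kafka.
class EventLog {
    private final List<String> events = new ArrayList<>();
    // Next position to read, tracked separately for each consumer group.
    private final Map<String, Integer> offsets = new HashMap<>();

    void append(String event) {
        events.add(event);
    }

    // Returns everything the group has not seen yet and advances its offset.
    // Reading never removes events, so other groups are unaffected.
    List<String> poll(String groupId) {
        int from = offsets.getOrDefault(groupId, 0);
        List<String> batch = new ArrayList<>(events.subList(from, events.size()));
        offsets.put(groupId, events.size());
        return batch;
    }
}
```

If a group polls once, misses two events while it is down, and then polls again, the second poll returns exactly those two events.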

Why Kafka?

Kafka is a strong choice for building event-driven microservices because of its distributed, fault-tolerant design and high throughput. A well-provisioned cluster can handle millions of messages per second, which helps explain its popularity in EDA use cases.

Kafka Topics and Producer-Consumer Setup

Kafka Architecture Overview

Kafka uses topics as central channels for event sharing. Producers send events to topics, while consumers read events from those topics.

Key Components:

- Broker: Kafka brokers store published events and serve them to consumers. A topic’s partitions are spread across brokers for scalability.

- Topics: Each topic contains events of a particular type (e.g., order-events).

- Partitions: Topics are split into partitions for parallel processing.

- Retention: Kafka retains events for a configured time or size limit, whether or not they have been consumed. Reading an event does not delete it.
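
To make the partitioning idea concrete, the sketch below shows one way a record key could be mapped to a partition so that events with the same key always land in the same partition, which preserves their relative order. It is a simplification: Kafka’s default partitioner hashes the serialized key with murmur2, while this example substitutes Arrays.hashCode. The class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Simplified key-to-partition mapping. Arrays.hashCode is a stand-in for
// the murmur2 hash that Kafka's default partitioner applies to key bytes.
class PartitionSketch {
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        // floorMod keeps the result in [0, numPartitions) even for negative hashes.
        return Math.floorMod(Arrays.hashCode(keyBytes), numPartitions);
    }
}
```

For example, using an order ID as the key would route all events for one order to a single partition. The producer examples in this post send without a key, in which case the client chooses the partition itself.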

Kafka Setup in Spring Boot

Step 1. Add Dependencies

Add the spring-kafka dependency to your pom.xml. It covers both producer and consumer support, and Spring Boot’s dependency management supplies the version:

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>

Step 2. Define Configuration for Kafka Producer

Set up a producer in Spring Boot to publish events:

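    import java.util.HashMap;
    import java.util.Map;

    import org.apache.kafka.clients.producer.ProducerConfig;
    import org.apache.kafka.common.serialization.StringSerializer;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.kafka.core.DefaultKafkaProducerFactory;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.kafka.core.ProducerFactory;

    @Configuration
    public class KafkaProducerConfig {

        // Creates producers that send String keys and String values.
        @Bean
        public ProducerFactory<String, String> producerFactory() {
            Map<String, Object> configProps = new HashMap<>();
            configProps.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
            configProps.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
            configProps.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
            return new DefaultKafkaProducerFactory<>(configProps);
        }

        // KafkaTemplate is the helper that services use to publish events.
        @Bean
        public KafkaTemplate<String, String> kafkaTemplate() {
            return new KafkaTemplate<>(producerFactory());
        }
    }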
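
The producer configuration above sets only the connection and serializers. If durability matters more than raw latency, a couple of extra settings are commonly added. The sketch below builds the same kind of map that producerFactory() passes to DefaultKafkaProducerFactory, using the plain string keys behind the ProducerConfig constants. The choice of acks=all and idempotence is an assumption for illustration, not part of the original setup, and recent Kafka client versions may already default to these values. The class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

class ProducerSettingsSketch {
    // Returns producer properties keyed by Kafka's standard config names.
    static Map<String, Object> reliableProducerProps(String bootstrapServers) {
        Map<String, Object> props = new HashMap<>();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Assumption: favor durability over latency.
        props.put("acks", "all");              // wait for all in-sync replicas
        props.put("enable.idempotence", true); // avoid duplicates on producer retries
        return props;
    }
}
```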

Step 3. Define Kafka Consumer Configuration

Set up consumers to read messages:

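    import java.util.HashMap;
    import java.util.Map;

    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.common.serialization.StringDeserializer;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
    import org.springframework.kafka.core.ConsumerFactory;
    import org.springframework.kafka.core.DefaultKafkaConsumerFactory;

    @Configuration
    public class KafkaConsumerConfig {

        // Creates consumers that read String keys and String values.
        @Bean
        public ConsumerFactory<String, String> consumerFactory() {
            Map<String, Object> props = new HashMap<>();
            props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
            props.put(ConsumerConfig.GROUP_ID_CONFIG, "group-id");
            props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
            props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
            return new DefaultKafkaConsumerFactory<>(props);
        }

        // Backs every @KafkaListener method in the application.
        @Bean
        public ConcurrentKafkaListenerContainerFactory<String, String> kafkaListenerContainerFactory() {
            ConcurrentKafkaListenerContainerFactory<String, String> factory =
                    new ConcurrentKafkaListenerContainerFactory<>();
            factory.setConsumerFactory(consumerFactory());
            return factory;
        }
    }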

Step 4. Create Producer and Consumer Services

Producer:

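    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.stereotype.Service;

    @Service
    public class ProducerService {

        private final KafkaTemplate<String, String> kafkaTemplate;

        // The KafkaTemplate bean is injected through the constructor.
        public ProducerService(KafkaTemplate<String, String> kafkaTemplate) {
            this.kafkaTemplate = kafkaTemplate;
        }

        // Publishes the message to the given topic without blocking the caller.
        public void sendMessage(String topic, String message) {
            kafkaTemplate.send(topic, message);
        }
    }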

Consumer:

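    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.stereotype.Service;

    @Service
    public class ConsumerService {

        // Invoked for each record on order-events. A groupId set here takes
        // precedence over the group.id from the consumer factory.
        @KafkaListener(topics = "order-events", groupId = "group-id")
        public void consume(String message) {
            System.out.println("Received message: " + message);
        }
    }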
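
The groupId on the listener is what lets consumers scale out: within one group, each partition is read by exactly one consumer, so adding instances splits the work. The sketch below shows a simplified round-robin split. Kafka ships several assignment strategies and this is not a reproduction of any of them; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class GroupAssignmentSketch {
    // Spreads partitions 0..numPartitions-1 across the group's consumers in
    // round-robin order, so each partition has exactly one owner in the group.
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("at least one consumer is required");
        }
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        for (int partition = 0; partition < numPartitions; partition++) {
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }
}
```

With two consumers and three partitions, one consumer owns two partitions and the other owns one. A consumer beyond the partition count would sit idle, which is why the partition count caps the useful parallelism of a group.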

Asynchronous Service Communication

How Async Works

Instead of synchronous API calls, asynchronous communication decouples services via event publishing and subscribing. Once an event is published, the producer continues its work without waiting.

Benefits of Async Communication:

- Improved Speed: Services don’t block on downstream responses.

- Fault Tolerance: A failed consumer doesn’t affect the producer.
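
The non-blocking behavior can be sketched with the JDK’s CompletableFuture. In recent Spring for Apache Kafka versions, KafkaTemplate.send returns a CompletableFuture, so the same callback style applies there. The sendAsync method below is a stand-in that fakes an acknowledgement and is not a Kafka API; all names in the example are hypothetical.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicReference;

class AsyncPublishSketch {
    // Stand-in for an asynchronous send: completes on another thread and
    // yields a fake acknowledgement string.
    static CompletableFuture<String> sendAsync(String topic, String event) {
        return CompletableFuture.supplyAsync(() -> "ack:" + topic + ":" + event);
    }

    // Publishes without blocking. The callbacks run once the send completes
    // and record either the acknowledgement or a failure message.
    static CompletableFuture<Void> publish(String event, AtomicReference<String> outcome) {
        return sendAsync("order-events", event)
                .handle((ack, error) -> error == null ? ack : "failed:" + error.getMessage())
                .thenAccept(outcome::set);
    }
}
```

The caller gets the future back immediately and can carry on with other work. Waiting on it with join() is only needed where the result must be known, such as in a test.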

Example Flow

- The Order Service publishes an event called order_created.

- The Inventory Service listens for the event and decrements the stock.

- The Billing Service listens for the same event and processes payment.

Real-World Use Case: Order → Inventory → Billing

Consider an e-commerce application with the following workflow:

- A customer places an order.

- The order affects inventory (e.g., reducing stock).

- The system processes payments.

Kafka Topics for Each Service

- Order Topic: Events such as order_created, order_canceled.

- Inventory Topic: Events like stock_updated.

- Billing Topic: Events like payment_success.

Implementation Steps

Order Service (Producer):

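    // Publishes the new order as a JSON string to the order-events topic.
    // order.toJson() is assumed to be a serialization helper on your Order class.
    public void placeOrder(Order order) {
        kafkaTemplate.send("order-events", order.toJson());
    }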

Inventory Service (Consumer for Order/Producer for Stock):

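    @KafkaListener(topics = "order-events", groupId = "inventory-group")
    public void handleOrder(String orderEvent) {
        // Parse the order and update stock here. updatedStockStatus stands for
        // the result of that update; its type is not shown in this example.
        kafkaTemplate.send("stock-events", updatedStockStatus.toJson());
    }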

Billing Service (Consumer for Order):

    @KafkaListener(topics = "order-events", groupId = "billing-group")
    public void processPayment(String orderEvent) {
        // Process payment
    }

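Because the Inventory and Billing listeners use different consumer group IDs, each service receives its own copy of every order event. This decoupling allows changes in one service without impacting the others, creating a scalable and maintainable architecture.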
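
To see the whole chain in one place without a running broker, the flow can be simulated in memory. The sketch below reuses the order-events and stock-events topic names from this post. Everything else, including the OrderFlowSketch class, the event strings, and the synchronous delivery, is an assumption made for illustration and does not reflect how Kafka delivers messages.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// In-memory simulation of Order -> Inventory -> Billing. Illustrative only.
class OrderFlowSketch {
    private final Map<String, List<Consumer<String>>> listeners = new HashMap<>();
    // Records what happened, in order, so the flow can be inspected.
    final List<String> trace = new ArrayList<>();

    void listen(String topic, Consumer<String> handler) {
        listeners.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
    }

    // Delivers the event to every listener on the topic; the sender never
    // references a listener directly.
    void send(String topic, String event) {
        trace.add(topic + " <- " + event);
        for (Consumer<String> handler : listeners.getOrDefault(topic, List.of())) {
            handler.accept(event);
        }
    }

    static OrderFlowSketch wire() {
        OrderFlowSketch bus = new OrderFlowSketch();
        // Inventory: consumes order events and publishes a stock event.
        bus.listen("order-events", order -> bus.send("stock-events", "stock_updated for " + order));
        // Billing: consumes the same order events independently.
        bus.listen("order-events", order -> bus.trace.add("billing charged " + order));
        return bus;
    }
}
```

Calling wire() and then send("order-events", "order-1") leaves three entries in trace: the order event, the stock event published by Inventory, and the Billing step. Adding a further service would mean one more listen call, with no change to the code that places orders.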

Summary

Event-Driven Architecture with Kafka turns Spring Boot microservices into scalable, fault-tolerant systems. Here are the key takeaways:

- EDA Importance: Enables decoupled, real-time communication between services.

- Kafka’s Role: Acts as a robust message broker with high throughput.

- Async Communication: Improves system speed and reliability.

- Real-world Use Case: Demonstrates how Order → Inventory → Billing services can work seamlessly.

Adopting Kafka with Spring Boot brings agility and efficiency to your application. Begin your event-driven architecture today and unlock unmatched scalability!